Visual Instruction Pretraining for Domain-Specific Foundation Models

# Introduction

Modern computer vision is converging on a closed loop in which perception, reasoning, and generation mutually reinforce each other. However, this loop remains incomplete: the top-down influence of high-level reasoning on the foundational learning of low-level perceptual features remains underexplored. This paper addresses this gap by proposing a new paradigm for pretraining foundation models in downstream domains. We introduce Visual insTruction Pretraining (ViTP), a novel approach that directly leverages reasoning to enhance perception. ViTP embeds a Vision Transformer (ViT) backbone within a Vision-Language Model (VLM) and pretrains it end-to-end on a rich corpus of visual instruction data curated from the target downstream domains. ViTP is powered by our proposed Visual Robustness Learning (VRL), which compels the ViT to learn robust and domain-relevant features from a sparse set of visual tokens. Extensive experiments on 16 challenging remote sensing and medical imaging benchmarks demonstrate that ViTP establishes new state-of-the-art performance across a diverse range of downstream tasks. The code is available at GitHub.

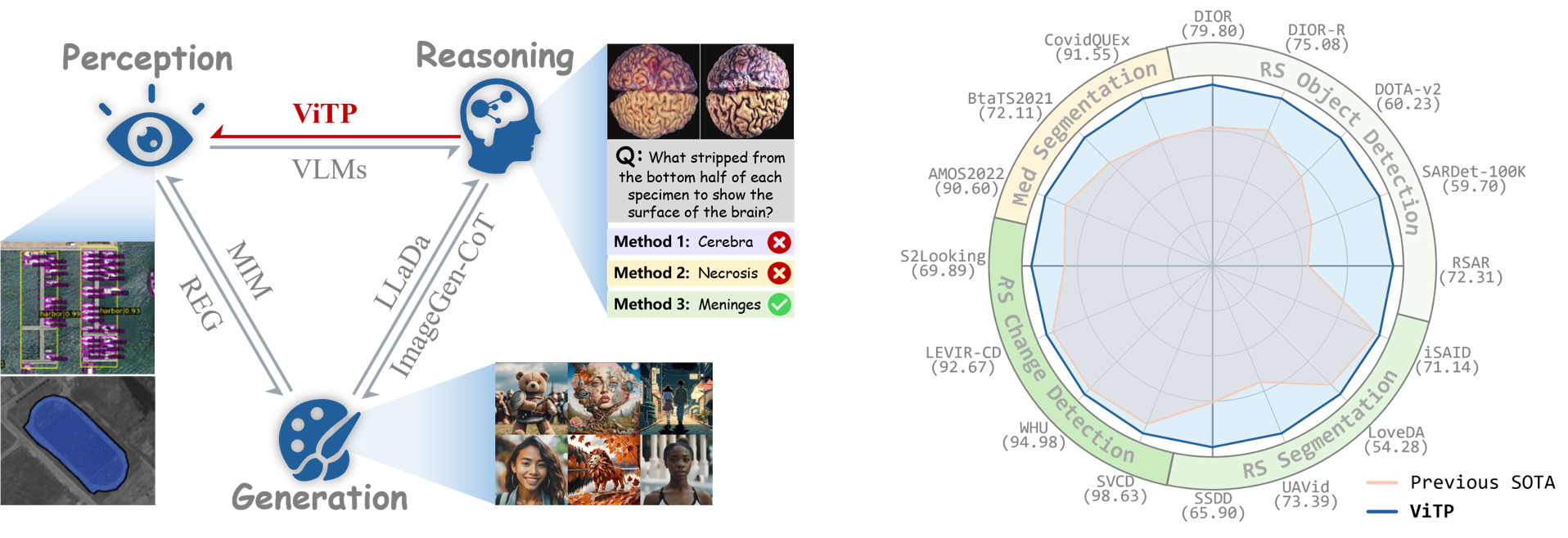

The synergistic relationship between perception, generation, and reasoning in modern CV. Our proposed ViTP forges a novel link from high-level reasoning to low-level perception, a previously underexplored connection. ViTP sets new SOTA performance across a diverse range of downstream tasks in medical imaging and remote sensing.

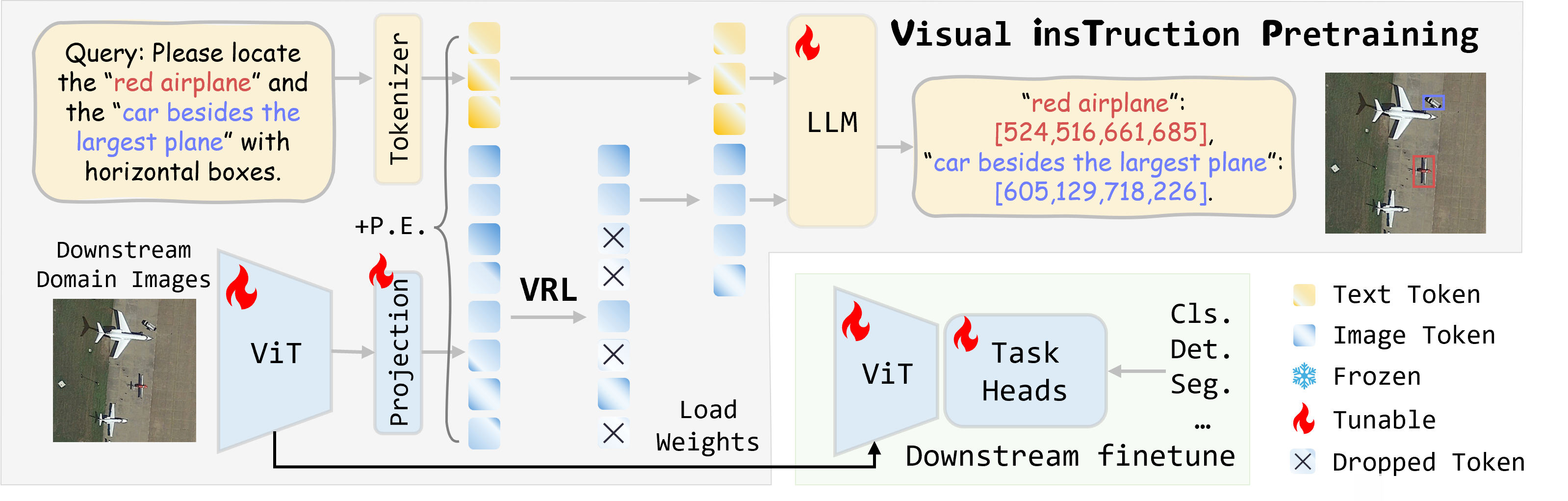

A conceptual illustration of the ViTP framework. A ViT backbone is embedded within a large VLM and then pretrained with a domain-specific instruction-following objective and Visual Robustness Learning (VRL). This process instils high-level semantic understanding into the ViT. The resulting weights are then used to initialize models for various downstream perception tasks.
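
To make the idea of learning from "a sparse set of visual tokens" concrete, the following is a minimal sketch of one way such sparsification could look. It is an illustrative assumption, not the VRL procedure itself: the text here does not specify how tokens are selected, so uniform random dropping, the function name `sparsify_visual_tokens`, and the `keep_ratio` parameter are all hypothetical.

```python
import random


def sparsify_visual_tokens(tokens, keep_ratio, rng):
    """Keep a random subset of visual tokens, preserving their original order.

    Hypothetical illustration only: uniform random selection is an assumption,
    not the selection rule used by VRL.
    """
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    if not tokens:
        return [], []
    # Always keep at least one token so the downstream model has some input.
    num_keep = max(1, round(len(tokens) * keep_ratio))
    # Sample indices without replacement, then sort to preserve spatial order.
    kept_indices = sorted(rng.sample(range(len(tokens)), num_keep))
    return [tokens[i] for i in kept_indices], kept_indices


# Toy example: 16 dummy "visual tokens", each a 4-dimensional feature vector.
rng = random.Random(0)
tokens = [[float(i)] * 4 for i in range(16)]
sparse_tokens, kept = sparsify_visual_tokens(tokens, keep_ratio=0.25, rng=rng)
print(len(sparse_tokens), kept)
```

In a setup like this, only the retained tokens would be passed on to the language model, so the instruction-following objective has to be met from partial visual evidence. That is the intuition the sketch is meant to convey.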