Space status: Runtime error

Commit ef29bef · Parent(s): d7d3089
Message: api
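The Space status above is Runtime error. One likely cause is the only net addition in this commit (hunk `@@ -77,22 +77,23 @@`): `btn.click(add, [num1, num2], output, api_name="addition")` is inserted as the first statement of `main()`. This assumes that `btn`, `add`, `num1`, `num2` and `output` are not defined anywhere else in app.py, which the diff cannot confirm because it shows only part of the file. Under that assumption, the line raises `NameError` as soon as `main()` runs. The sketch below reproduces only that line inside a hypothetical stand-in function. `main_as_committed` and `startup_error` are illustrative names and are not part of app.py.

```python
def main_as_committed() -> None:
    # Hypothetical stand-in for app.py's main() after commit ef29bef: only the
    # added first statement is reproduced. Nothing in this module defines
    # btn, add, num1, num2 or output (assumed to hold for app.py as well).
    btn.click(add, [num1, num2], output, api_name="addition")  # noqa: F821


def startup_error() -> str:
    """Run main_as_committed() and describe the NameError it raises, if any."""
    try:
        main_as_committed()
    except NameError as exc:
        return f"{type(exc).__name__}: {exc}"
    return "no error"


print(startup_error())  # prints: NameError: name 'btn' is not defined
```

Python evaluates `btn` before it looks up the `click` attribute or builds the argument list. The first undefined name is therefore the one that gets reported.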

app.py

CHANGED

|

@@ -39,7 +39,7 @@ DESCRIPTION = '''

|

|

| 39 |

FOOTER = '<div align=center><img id="visitor-badge" alt="visitor badge" src="https://visitor-badge.laobi.icu/badge?page_id=williamyang1991/VToonify" /></div>'

|

| 40 |

|

| 41 |

ARTICLE = r"""

|

| 42 |

-

If VToonify is helpful, please help to ⭐ the <a href='https://github.com/williamyang1991/VToonify' target='_blank'>Github Repo</a>. Thanks!

|

| 43 |

[](https://github.com/williamyang1991/VToonify)

|

| 44 |

---

|

| 45 |

📝 **Citation**

|

|

@@ -60,7 +60,7 @@ If our work is useful for your research, please consider citing:

|

|

| 60 |

```

|

| 61 |

|

| 62 |

📋 **License**

|

| 63 |

-

This project is licensed under <a rel="license" href="https://github.com/williamyang1991/VToonify/blob/main/LICENSE.md">S-Lab License 1.0</a>.

|

| 64 |

Redistribution and use for non-commercial purposes should follow this license.

|

| 65 |

|

| 66 |

📧 **Contact**

|

|

@@ -77,22 +77,23 @@ def set_example_image(example: list) -> dict:

|

|

| 77 |

return gr.Image.update(value=example[0])

|

| 78 |

|

| 79 |

def set_example_video(example: list) -> dict:

|

| 80 |

-

return gr.Video.update(value=example[0]),

|

| 81 |

-

|

| 82 |

sample_video = ['./vtoonify/data/529_2.mp4','./vtoonify/data/7154235.mp4','./vtoonify/data/651.mp4','./vtoonify/data/908.mp4']

|

| 83 |

sample_vid = gr.Video(label='Video file') #for displaying the example

|

| 84 |

-

example_videos = gr.components.Dataset(components=[sample_vid], samples=[[path] for path in sample_video], type='values', label='Video Examples')

|

| 85 |

|

| 86 |

def main():

|

|

|

|

| 87 |

args = parse_args()

|

| 88 |

args.device = 'cuda' if torch.cuda.is_available() else 'cpu'

|

| 89 |

print('*** Now using %s.'%(args.device))

|

| 90 |

model = Model(device=args.device)

|

| 91 |

-

|

| 92 |

with gr.Blocks(theme=args.theme, css='style.css') as demo:

|

| 93 |

-

|

| 94 |

gr.Markdown(DESCRIPTION)

|

| 95 |

-

|

| 96 |

with gr.Box():

|

| 97 |

gr.Markdown('''## Step 1(Select Style)

|

| 98 |

- Select **Style Type**.

|

|

@@ -102,16 +103,16 @@ def main():

|

|

| 102 |

''')

|

| 103 |

with gr.Row():

|

| 104 |

with gr.Column():

|

| 105 |

-

gr.Markdown('''Select Style Type''')

|

| 106 |

with gr.Row():

|

| 107 |

style_type = gr.Radio(label='Style Type',

|

| 108 |

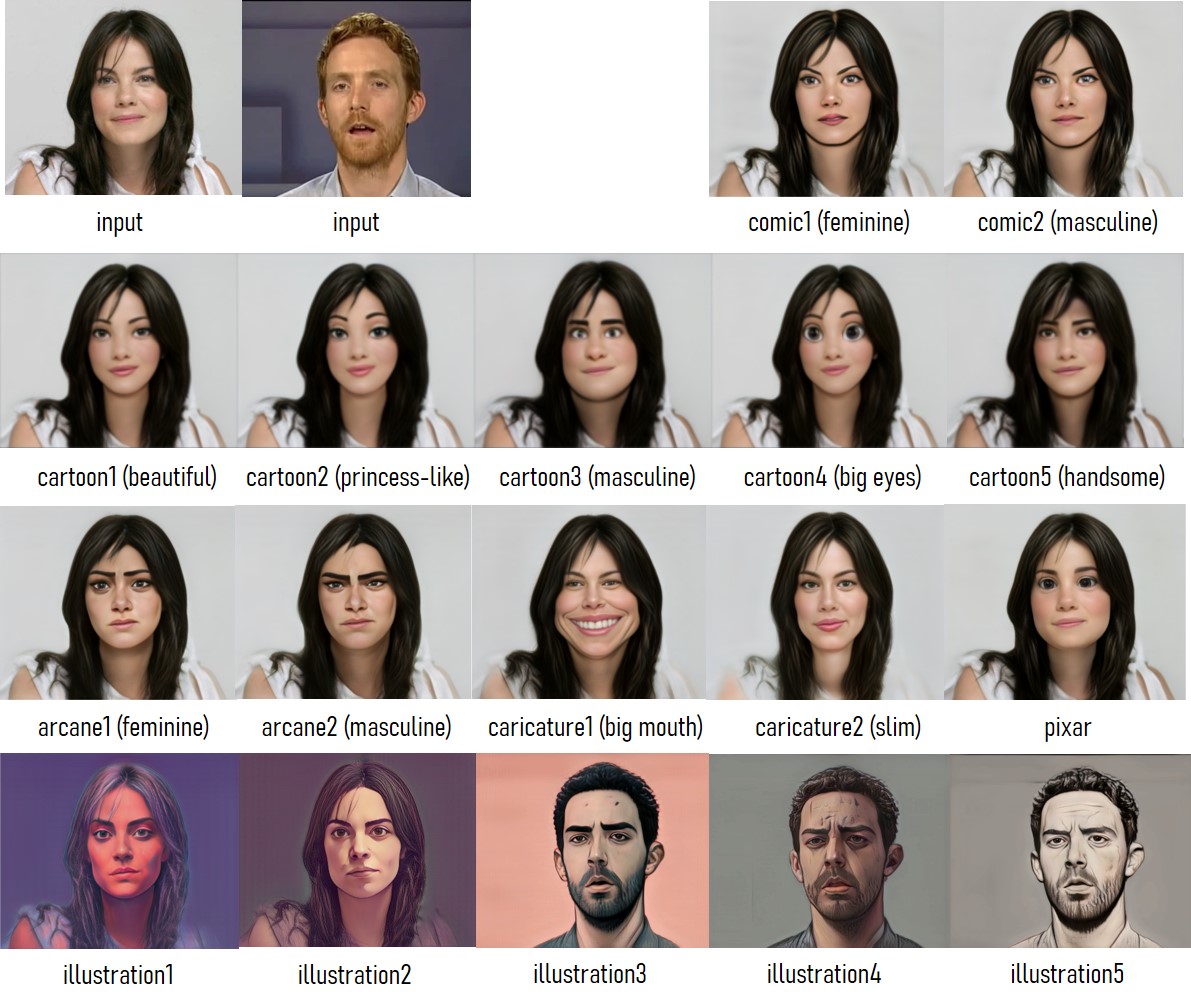

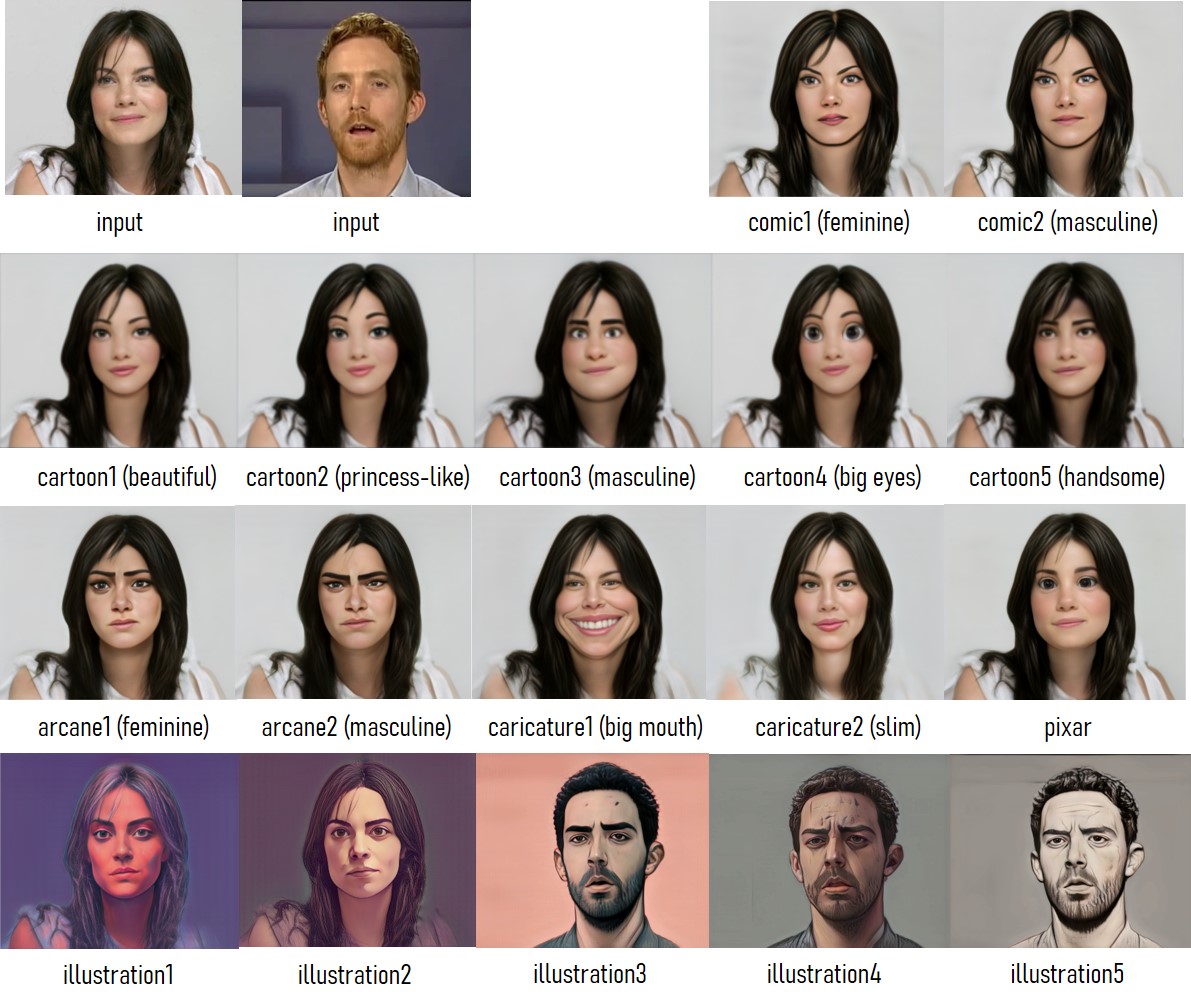

choices=['cartoon1','cartoon1-d','cartoon2-d','cartoon3-d',

|

| 109 |

'cartoon4','cartoon4-d','cartoon5-d','comic1-d',

|

| 110 |

'comic2-d','arcane1','arcane1-d','arcane2', 'arcane2-d',

|

| 111 |

'caricature1','caricature2','pixar','pixar-d',

|

| 112 |

-

'illustration1-d', 'illustration2-d', 'illustration3-d', 'illustration4-d', 'illustration5-d',

|

| 113 |

]

|

| 114 |

-

)

|

| 115 |

exstyle = gr.Variable()

|

| 116 |

with gr.Row():

|

| 117 |

loadmodel_button = gr.Button('Load Model')

|

|

@@ -119,7 +120,7 @@ def main():

|

|

| 119 |

load_info = gr.Textbox(label='Process Information', interactive=False, value='No model loaded.')

|

| 120 |

with gr.Column():

|

| 121 |

gr.Markdown('''Reference Styles

|

| 122 |

-

''')

|

| 123 |

|

| 124 |

|

| 125 |

with gr.Box():

|

|

@@ -161,19 +162,19 @@ def main():

|

|

| 161 |

256,

|

| 162 |

value=200,

|

| 163 |

step=8,

|

| 164 |

-

label='right')

|

| 165 |

with gr.Box():

|

| 166 |

with gr.Column():

|

| 167 |

-

gr.Markdown('''Input''')

|

| 168 |

with gr.Row():

|

| 169 |

input_image = gr.Image(label='Input Image',

|

| 170 |

type='filepath')

|

| 171 |

with gr.Row():

|

| 172 |

-

preprocess_image_button = gr.Button('Rescale Image')

|

| 173 |

with gr.Row():

|

| 174 |

input_video = gr.Video(label='Input Video',

|

| 175 |

mirror_webcam=False,

|

| 176 |

-

type='filepath')

|

| 177 |

with gr.Row():

|

| 178 |

preprocess_video0_button = gr.Button('Rescale First Frame')

|

| 179 |

preprocess_video1_button = gr.Button('Rescale Video')

|

|

@@ -191,7 +192,7 @@ def main():

|

|

| 191 |

with gr.Row():

|

| 192 |

aligned_video = gr.Video(label='Rescaled Video',

|

| 193 |

type='mp4',

|

| 194 |

-

interactive=False)

|

| 195 |

with gr.Row():

|

| 196 |

with gr.Column():

|

| 197 |

paths = ['./vtoonify/data/pexels-andrea-piacquadio-733872.jpg','./vtoonify/data/i5R8hbZFDdc.jpg','./vtoonify/data/yRpe13BHdKw.jpg','./vtoonify/data/ILip77SbmOE.jpg','./vtoonify/data/077436.jpg','./vtoonify/data/081680.jpg']

|

|

@@ -200,15 +201,15 @@ def main():

|

|

| 200 |

label='Image Examples')

|

| 201 |

with gr.Column():

|

| 202 |

#example_videos = gr.Dataset(components=[input_video], samples=[['./vtoonify/data/529.mp4']], type='values')

|

| 203 |

-

#to render video example on mouse hover/click

|

| 204 |

example_videos.render()

|

| 205 |

#to load sample video into input_video upon clicking on it

|

| 206 |

-

def load_examples(video):

|

| 207 |

#print("****** inside load_example() ******")

|

| 208 |

#print("in_video is : ", video[0])

|

| 209 |

return video[0]

|

| 210 |

|

| 211 |

-

example_videos.click(load_examples, example_videos, input_video)

|

| 212 |

|

| 213 |

with gr.Box():

|

| 214 |

gr.Markdown('''## Step 3 (Generate Style Transferred Image/Video)''')

|

|

@@ -224,10 +225,10 @@ def main():

|

|

| 224 |

1,

|

| 225 |

value=0.5,

|

| 226 |

step=0.05,

|

| 227 |

-

label='Style Degree')

|

| 228 |

with gr.Column():

|

| 229 |

gr.Markdown('''

|

| 230 |

-

''')

|

| 231 |

with gr.Row():

|

| 232 |

output_info = gr.Textbox(label='Process Information', interactive=False, value='n.a.')

|

| 233 |

with gr.Row():

|

|

@@ -242,10 +243,10 @@ def main():

|

|

| 242 |

with gr.Row():

|

| 243 |

result_video = gr.Video(label='Result Video',

|

| 244 |

type='mp4',

|

| 245 |

-

interactive=False)

|

| 246 |

with gr.Row():

|

| 247 |

vtoonify_button = gr.Button('VToonify!')

|

| 248 |

-

|

| 249 |

gr.Markdown(ARTICLE)

|

| 250 |

gr.Markdown(FOOTER)

|

| 251 |

|

|

@@ -279,7 +280,7 @@ def main():

|

|

| 279 |

example_images.click(fn=set_example_image,

|

| 280 |

inputs=example_images,

|

| 281 |

outputs=example_images.components)

|

| 282 |

-

|

| 283 |

demo.launch(

|

| 284 |

enable_queue=args.enable_queue,

|

| 285 |

server_port=args.port,

|

|

|

|

| 39 |

FOOTER = '<div align=center><img id="visitor-badge" alt="visitor badge" src="https://visitor-badge.laobi.icu/badge?page_id=williamyang1991/VToonify" /></div>'

|

| 40 |

|

| 41 |

ARTICLE = r"""

|

| 42 |

+

If VToonify is helpful, please help to ⭐ the <a href='https://github.com/williamyang1991/VToonify' target='_blank'>Github Repo</a>. Thanks!

|

| 43 |

[](https://github.com/williamyang1991/VToonify)

|

| 44 |

---

|

| 45 |

📝 **Citation**

|

|

|

|

| 60 |

```

|

| 61 |

|

| 62 |

📋 **License**

|

| 63 |

+

This project is licensed under <a rel="license" href="https://github.com/williamyang1991/VToonify/blob/main/LICENSE.md">S-Lab License 1.0</a>.

|

| 64 |

Redistribution and use for non-commercial purposes should follow this license.

|

| 65 |

|

| 66 |

📧 **Contact**

|

|

|

|

| 77 |

return gr.Image.update(value=example[0])

|

| 78 |

|

| 79 |

def set_example_video(example: list) -> dict:

|

| 80 |

+

return gr.Video.update(value=example[0]),

|

| 81 |

+

|

| 82 |

sample_video = ['./vtoonify/data/529_2.mp4','./vtoonify/data/7154235.mp4','./vtoonify/data/651.mp4','./vtoonify/data/908.mp4']

|

| 83 |

sample_vid = gr.Video(label='Video file') #for displaying the example

|

| 84 |

+

example_videos = gr.components.Dataset(components=[sample_vid], samples=[[path] for path in sample_video], type='values', label='Video Examples')

|

| 85 |

|

| 86 |

def main():

|

| 87 |

+

btn.click(add, [num1, num2], output, api_name="addition")

|

| 88 |

args = parse_args()

|

| 89 |

args.device = 'cuda' if torch.cuda.is_available() else 'cpu'

|

| 90 |

print('*** Now using %s.'%(args.device))

|

| 91 |

model = Model(device=args.device)

|

| 92 |

+

|

| 93 |

with gr.Blocks(theme=args.theme, css='style.css') as demo:

|

| 94 |

+

|

| 95 |

gr.Markdown(DESCRIPTION)

|

| 96 |

+

|

| 97 |

with gr.Box():

|

| 98 |

gr.Markdown('''## Step 1(Select Style)

|

| 99 |

- Select **Style Type**.

|

|

|

|

| 103 |

''')

|

| 104 |

with gr.Row():

|

| 105 |

with gr.Column():

|

| 106 |

+

gr.Markdown('''Select Style Type''')

|

| 107 |

with gr.Row():

|

| 108 |

style_type = gr.Radio(label='Style Type',

|

| 109 |

choices=['cartoon1','cartoon1-d','cartoon2-d','cartoon3-d',

|

| 110 |

'cartoon4','cartoon4-d','cartoon5-d','comic1-d',

|

| 111 |

'comic2-d','arcane1','arcane1-d','arcane2', 'arcane2-d',

|

| 112 |

'caricature1','caricature2','pixar','pixar-d',

|

| 113 |

+

'illustration1-d', 'illustration2-d', 'illustration3-d', 'illustration4-d', 'illustration5-d',

|

| 114 |

]

|

| 115 |

+

)

|

| 116 |

exstyle = gr.Variable()

|

| 117 |

with gr.Row():

|

| 118 |

loadmodel_button = gr.Button('Load Model')

|

|

|

|

| 120 |

load_info = gr.Textbox(label='Process Information', interactive=False, value='No model loaded.')

|

| 121 |

with gr.Column():

|

| 122 |

gr.Markdown('''Reference Styles

|

| 123 |

+

''')

|

| 124 |

|

| 125 |

|

| 126 |

with gr.Box():

|

|

|

|

| 162 |

256,

|

| 163 |

value=200,

|

| 164 |

step=8,

|

| 165 |

+

label='right')

|

| 166 |

with gr.Box():

|

| 167 |

with gr.Column():

|

| 168 |

+

gr.Markdown('''Input''')

|

| 169 |

with gr.Row():

|

| 170 |

input_image = gr.Image(label='Input Image',

|

| 171 |

type='filepath')

|

| 172 |

with gr.Row():

|

| 173 |

+

preprocess_image_button = gr.Button('Rescale Image')

|

| 174 |

with gr.Row():

|

| 175 |

input_video = gr.Video(label='Input Video',

|

| 176 |

mirror_webcam=False,

|

| 177 |

+

type='filepath')

|

| 178 |

with gr.Row():

|

| 179 |

preprocess_video0_button = gr.Button('Rescale First Frame')

|

| 180 |

preprocess_video1_button = gr.Button('Rescale Video')

|

|

|

|

| 192 |

with gr.Row():

|

| 193 |

aligned_video = gr.Video(label='Rescaled Video',

|

| 194 |

type='mp4',

|

| 195 |

+

interactive=False)

|

| 196 |

with gr.Row():

|

| 197 |

with gr.Column():

|

| 198 |

paths = ['./vtoonify/data/pexels-andrea-piacquadio-733872.jpg','./vtoonify/data/i5R8hbZFDdc.jpg','./vtoonify/data/yRpe13BHdKw.jpg','./vtoonify/data/ILip77SbmOE.jpg','./vtoonify/data/077436.jpg','./vtoonify/data/081680.jpg']

|

|

|

|

| 201 |

label='Image Examples')

|

| 202 |

with gr.Column():

|

| 203 |

#example_videos = gr.Dataset(components=[input_video], samples=[['./vtoonify/data/529.mp4']], type='values')

|

| 204 |

+

#to render video example on mouse hover/click

|

| 205 |

example_videos.render()

|

| 206 |

#to load sample video into input_video upon clicking on it

|

| 207 |

+

def load_examples(video):

|

| 208 |

#print("****** inside load_example() ******")

|

| 209 |

#print("in_video is : ", video[0])

|

| 210 |

return video[0]

|

| 211 |

|

| 212 |

+

example_videos.click(load_examples, example_videos, input_video)

|

| 213 |

|

| 214 |

with gr.Box():

|

| 215 |

gr.Markdown('''## Step 3 (Generate Style Transferred Image/Video)''')

|

|

|

|

| 225 |

1,

|

| 226 |

value=0.5,

|

| 227 |

step=0.05,

|

| 228 |

+

label='Style Degree')

|

| 229 |

with gr.Column():

|

| 230 |

gr.Markdown('''

|

| 231 |

+

''')

|

| 232 |

with gr.Row():

|

| 233 |

output_info = gr.Textbox(label='Process Information', interactive=False, value='n.a.')

|

| 234 |

with gr.Row():

|

|

|

|

| 243 |

with gr.Row():

|

| 244 |

result_video = gr.Video(label='Result Video',

|

| 245 |

type='mp4',

|

| 246 |

+

interactive=False)

|

| 247 |

with gr.Row():

|

| 248 |

vtoonify_button = gr.Button('VToonify!')

|

| 249 |

+

|

| 250 |

gr.Markdown(ARTICLE)

|

| 251 |

gr.Markdown(FOOTER)

|

| 252 |

|

|

|

|

| 280 |

example_images.click(fn=set_example_image,

|

| 281 |

inputs=example_images,

|

| 282 |

outputs=example_images.components)

|

| 283 |

+

|

| 284 |

demo.launch(

|

| 285 |

enable_queue=args.enable_queue,

|

| 286 |

server_port=args.port,

|
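The added `btn.click(add, [num1, num2], output, api_name="addition")` line appears to follow the pattern from Gradio's documentation for naming an API endpoint in a `gr.Blocks` app. In that pattern, the listener is registered inside the `with gr.Blocks() as demo:` context, after every component it references has been created. The following is a minimal sketch of that pattern. `add`, `build_demo` and the component labels are illustrative only. The sketch assumes a Gradio release (3.x or later) whose event listeners accept `api_name`.

```python
def add(num1: float, num2: float) -> float:
    """Illustrative handler (not part of VToonify): sum the two inputs."""
    return num1 + num2


def build_demo():
    """Build a Blocks app that exposes `add` under the API name "addition"."""
    # Imported lazily so `add` can be exercised without Gradio installed.
    import gradio as gr

    with gr.Blocks() as demo:
        # Create every component the listener refers to first...
        num1 = gr.Number(label="num1")
        num2 = gr.Number(label="num2")
        output = gr.Number(label="sum")
        btn = gr.Button("Add")
        # ...then register the listener inside the Blocks context.
        # api_name="addition" gives this event a named API endpoint.
        btn.click(add, [num1, num2], output, api_name="addition")
    return demo
```

Calling `build_demo().launch()` would start the sketch app. For app.py, the analogous fix would presumably be to remove the added line from the top of `main()` and pass `api_name=...` to an existing listener inside the `with gr.Blocks(theme=args.theme, css='style.css') as demo:` block, such as one bound to `vtoonify_button`. That listener falls outside the hunks shown here, so its arguments are not reproduced.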