Hey everyone,

I wanted to share a project I’ve been working on for a while called Pro-RomanAI NovaLab. It’s a local, offline Python-based IDE that connects to any GGUF model using llama.cpp and gives each model its own independent “brain.”

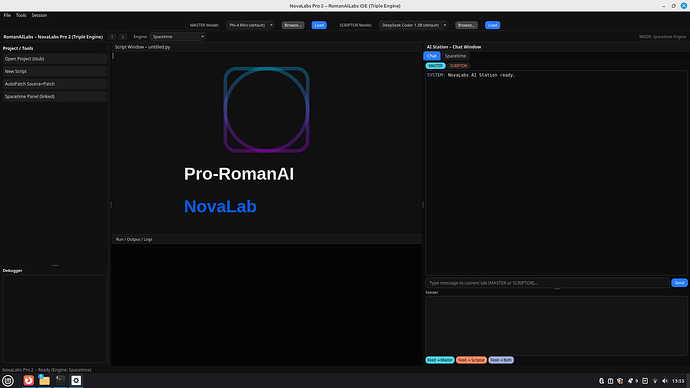

The screenshots show the main interface, which has two separate chat windows:

- Scriptor (left): a model focused on code patching, line-by-line debugging, and fast technical responses.
- Master (right): a second model that handles deeper reasoning, architectural insights, and bigger-picture explanations.

Both models run locally, side-by-side, and can be fed the same code to get two perspectives at once.
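For anyone curious what "same code, two perspectives" could look like in practice, here is a minimal sketch. It is not NovaLab's actual code. It assumes the llama-cpp-python bindings (`Llama`, `create_chat_completion`), and the names `ask_both` and `make_llama_model` are made up for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# A "model" here is any callable that maps a prompt string to a reply string.
Model = Callable[[str], str]


def ask_both(prompt: str, scriptor: Model, master: Model) -> Dict[str, str]:
    """Send the same prompt to both models in parallel and collect the replies."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        futures = {
            "scriptor": pool.submit(scriptor, prompt),
            "master": pool.submit(master, prompt),
        }
        return {name: fut.result() for name, fut in futures.items()}


def make_llama_model(model_path: str, system_prompt: str) -> Model:
    """Wrap a GGUF file as a Model (hypothetical helper, assumes llama-cpp-python)."""
    from llama_cpp import Llama  # imported lazily so the sketch runs without it

    llm = Llama(model_path=model_path)

    def run(prompt: str) -> str:
        out = llm.create_chat_completion(
            messages=[
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": prompt},
            ]
        )
        return out["choices"][0]["message"]["content"]

    return run
```

Keeping the model behind a plain callable means each side can have its own system prompt and its own GGUF file, and the fan-out logic can be tried with stub functions before any model is loaded.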

Below those windows is the Code Feeder, which works like a small built-in editor:

- Runs syntax checks
- Highlights errors
- Auto-patches broken code
- Refactors sections
- Feeds code chunks to one or both models
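As a rough idea of how the syntax check and chunking steps might work for Python input, here is a small standard-library sketch. The function names `check_syntax` and `chunk_lines` and the 40-line default are assumptions for illustration, not what the Code Feeder necessarily does.

```python
import ast
from typing import List, Optional, Tuple


def check_syntax(source: str) -> Optional[Tuple[int, str]]:
    """Return None if the source parses, else (line number, message)."""
    try:
        ast.parse(source)
    except SyntaxError as err:
        return (err.lineno or 0, err.msg)
    return None


def chunk_lines(source: str, max_lines: int = 40) -> List[str]:
    """Split source into consecutive chunks of at most max_lines lines each."""
    lines = source.splitlines()
    return [
        "\n".join(lines[i:i + max_lines])
        for i in range(0, len(lines), max_lines)
    ]
```

The line number returned by `check_syntax` is what an editor would use to highlight the offending line, and each chunk from `chunk_lines` can be sent to a model as a separate prompt.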

The debugger panel (bottom right) shows the model logs, including chunk processing and system information (CPU, RAM, swap usage, etc.). It’s useful for seeing what each engine is doing under the hood.
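The system information part of a panel like this can be approximated with the standard library alone. The sketch below is Linux-only in practice, since it expects text in the `/proc/meminfo` format, and the names `parse_meminfo` and `system_snapshot` are hypothetical. How NovaLab actually collects these numbers is not stated here.

```python
import os
from typing import Dict


def parse_meminfo(text: str) -> Dict[str, int]:
    """Parse /proc/meminfo-style text into {field: number} (most fields are in kB)."""
    info: Dict[str, int] = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts and parts[0].isdigit():
            info[name.strip()] = int(parts[0])
    return info


def system_snapshot(meminfo_text: str) -> Dict[str, float]:
    """Summarise CPU count plus RAM and swap usage in MiB."""
    mem = parse_meminfo(meminfo_text)

    def to_mib(kb: int) -> float:
        return round(kb / 1024, 1)

    return {
        "cpu_count": os.cpu_count() or 1,
        "ram_used_mib": to_mib(mem.get("MemTotal", 0) - mem.get("MemAvailable", 0)),
        "swap_used_mib": to_mib(mem.get("SwapTotal", 0) - mem.get("SwapFree", 0)),
    }
```

On Linux you would pass in the contents of `/proc/meminfo`. A cross-platform tool would more likely use a library such as psutil (`psutil.virtual_memory()`, `psutil.swap_memory()`).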

Nothing here requires an online API — everything runs through local GGUF models of your choice. You can load a small coder model on one side and a larger reasoning model on the other, and they work together in real time.

This isn’t a finished product yet, but it’s becoming a helpful tool for experimenting with local models, coding tasks, and understanding how different models behave when given the same input.

Sharing it here mainly for visibility and feedback.

Thanks for taking a look.

— Daniel (RomanAI Labs)