VAAS: Vision-Attention Anomaly Scoring

Model Summary

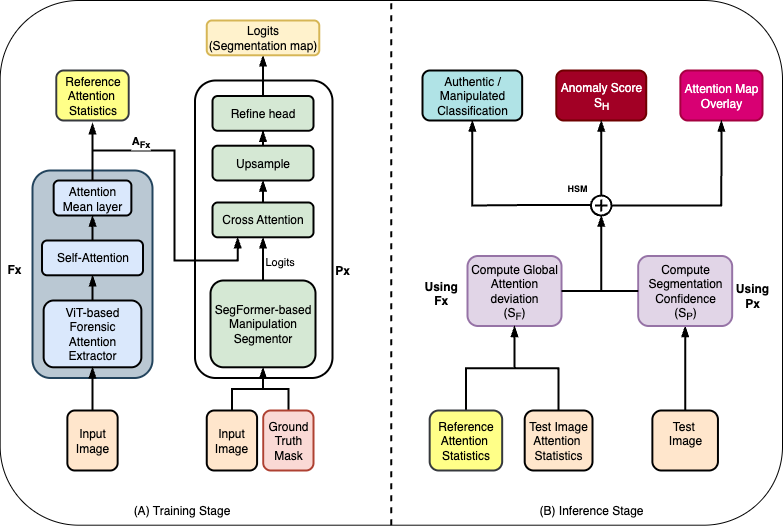

VAAS (Vision-Attention Anomaly Scoring) is a dual-module vision framework for image anomaly detection and localization. It combines global attention-based reasoning with patch-level self-consistency analysis to produce a continuous, interpretable anomaly score alongside dense spatial anomaly maps. Rather than making binary decisions, VAAS estimates where anomalies occur and how strongly they deviate from learned visual regularities, enabling explainable image analysis and integrity assessment.

Examples of detection and scoring

Architecture Overview

VAAS consists of two complementary components:

- Global Attention Module (Fx): A Vision Transformer backbone that captures global semantic and structural irregularities using attention distributions.

- Patch-Level Module (Px): A SegFormer-based segmentation model that identifies local inconsistencies in texture, boundaries, and regions.

These components are combined via a hybrid scoring mechanism:

- S_F: Global attention fidelity score

- S_P: Patch-level plausibility score

- S_H: Final hybrid anomaly score

S_H provides a continuous measure of anomaly intensity rather than a binary decision.
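As a rough illustration of how two scores can be blended into one continuous value, the sketch below uses a convex combination controlled by a mixing weight. This formula is an assumption made for illustration only; this card does not state the exact scoring rule, and the definition of S_H is given in the research paper. The function name `hybrid_score` is hypothetical, and the `alpha` parameter merely echoes the `alpha` argument shown in the Usage section.

```python
def hybrid_score(s_f: float, s_p: float, alpha: float = 0.5) -> float:
    """Blend a global score and a patch-level score into one value.

    ASSUMPTION: a simple convex combination, used here for illustration.
    It is not confirmed to be the formula VAAS uses internally.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    # alpha = 1 keeps only the global score, alpha = 0 only the patch score.
    return alpha * s_f + (1.0 - alpha) * s_p


# Hypothetical input scores, both assumed to lie in [0, 1].
print(hybrid_score(0.2, 0.8, alpha=0.5))
```

Under this assumed form, the blended value always stays between the two input scores, which is one way a hybrid score can remain continuous rather than collapsing to a yes/no label.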

Installation

VAAS is distributed as a lightweight inference library that installs quickly. PyTorch is required only when running inference or loading pretrained VAAS models, so users can install, inspect, and integrate VAAS without heavy dependencies.

This model was produced using vaas==0.1.7, but newer versions of VAAS may also be compatible for inference.

1. Install PyTorch

To run inference or load pretrained VAAS models, install PyTorch and torchvision for your system (CPU or GPU). Follow the official PyTorch installation guide for your platform:

https://pytorch.org/get-started/locally/

Quick installation (CPU)

pip install torch torchvision

2. Install VAAS

pip install vaas

VAAS will automatically detect PyTorch at runtime and raise a clear error if it is missing.
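The runtime check described above can be approximated with the standard library. The sketch below is not the VAAS implementation; the helper names `is_installed` and `require` are hypothetical, and it only shows one common way to detect an optional dependency without importing it.

```python
import importlib.util


def is_installed(package: str) -> bool:
    """Return True if a top-level package can be found, without importing it."""
    return importlib.util.find_spec(package) is not None


def require(package: str, hint: str) -> None:
    """Raise a descriptive ImportError when an optional dependency is missing."""
    if not is_installed(package):
        raise ImportError(f"{package!r} is required for this operation. {hint}")


if __name__ == "__main__":
    # Reports whether PyTorch is present in the current environment.
    print("torch available:", is_installed("torch"))
```

A check like this can be run before loading a model, so a missing dependency fails early with an actionable message instead of deep inside an import chain.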

Usage

Try VAAS instantly on Google Colab (no setup required):

The notebooks cover:

- 01_detecting_image_manipulation_quick_start.ipynb

- 02_where_was_the_image_manipulated.ipynb

- 03_understanding_vaas_scores_sf_sp_sh.ipynb

- 04_effect_of_alpha_on_anomaly_scoring.ipynb

- 05_running_vaas_on_cpu_cuda_mps.ipynb

- 06_loading_vaas_models_from_huggingface.ipynb

- 07_batch_analysis_with_vaas_folder_workflow.ipynb

- 08_ranking_images_by_visual_suspicion.ipynb

- 09_using_vaas_outputs_in_downstream_research.ipynb

- 10_known_limitations_and_future_research_directions.ipynb
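For readers who want the gist of the batch and ranking notebooks before opening them, the idea of ordering images by suspicion can be sketched in a few lines. This is an illustrative sketch, not code taken from the notebooks: `rank_by_score` is a hypothetical helper, and the scores below are made-up stand-ins. In real use the scoring function would wrap the pipeline shown later in this card, for example by returning `pipeline(image)["S_H"]` for each image.

```python
from typing import Callable, Iterable


def rank_by_score(
    items: Iterable[str], score_fn: Callable[[str], float]
) -> list[tuple[str, float]]:
    """Score every item and return (item, score) pairs, highest score first."""
    scored = [(item, float(score_fn(item))) for item in items]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Made-up scores standing in for real S_H values (illustration only).
fake_scores = {"a.jpg": 0.12, "b.jpg": 0.87, "c.jpg": 0.45}

ranking = rank_by_score(fake_scores, fake_scores.get)
for name, score in ranking:
    print(f"{score:.2f}  {name}")
```

Keeping the scoring function as a parameter means the same ranking code works with any score source, including cached results from an earlier batch run.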

1. Quick start: run VAAS and get a visual result

The fastest way to verify VAAS is working is to generate a visualization from a single image.

from io import BytesIO

import requests
from PIL import Image
from vaas.inference.pipeline import VAASPipeline

pipeline = VAASPipeline.from_pretrained(
    repo_id="OBA-Research/vaas",
    device="cpu",
    alpha=0.5,
    # Other released variants: "v1-medium-df2023" and "v1-large-df2023".
    model_variant="v1-base-df2023",
)

# Option A: use a local image
# image = Image.open("example.jpg").convert("RGB")
# result = pipeline(image)

# Option B: use an online image
url = "https://raw.githubusercontent.com/OBA-Research/VAAS/main/examples/images/COCO_DF_C110B00000_00539519.jpg"
image = Image.open(BytesIO(requests.get(url).content)).convert("RGB")

pipeline.visualize(
    image=image,
    save_path="vaas_visualization.png",
    mode="all",  # one of "all", "px", "binary", "fx"
    threshold=0.5,
)

This produces a single figure containing:

- The original image

- Patch-level anomaly heatmaps (Px)

- Global attention overlays (Fx)

- A gauge showing the hybrid anomaly score (S_H)

The output is saved to vaas_visualization.png.

2. Programmatic inference (scores + anomaly map)

For numerical outputs and downstream processing, call the pipeline directly:

result = pipeline(image)
print(result)

anomaly_map = result["anomaly_map"]

Output format

{
    "S_F": float,           # global attention score
    "S_P": float,           # patch consistency score
    "S_H": float,           # hybrid anomaly score
    "anomaly_map": ndarray  # shape (224, 224)
}
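One simple way to use the anomaly map downstream is to threshold it into a binary mask and measure how much of the image is flagged. The sketch below runs on a synthetic array rather than real VAAS output. It assumes map values lie in [0, 1] with higher meaning more anomalous, which this card does not state explicitly; `summarize_anomaly_map` is a hypothetical helper, and the 0.5 threshold simply mirrors the value passed to `visualize` above.

```python
import numpy as np


def summarize_anomaly_map(anomaly_map: np.ndarray, threshold: float = 0.5):
    """Return a boolean mask and the fraction of pixels at or above threshold.

    ASSUMPTION: values are in [0, 1] and larger values are more anomalous.
    """
    mask = anomaly_map >= threshold
    return mask, float(mask.mean())


# Synthetic stand-in for result["anomaly_map"], shape (224, 224).
demo_map = np.zeros((224, 224), dtype=np.float32)
demo_map[:56, :56] = 0.9  # pretend the top-left corner looks suspicious

mask, fraction = summarize_anomaly_map(demo_map, threshold=0.5)
print(mask.shape, fraction)
```

The flagged fraction gives a single number that can be logged next to S_H, while the mask can be resized and overlaid on the original image for inspection.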

Model Variants (Planned & Released)

| Model | Training Data | Description | Reported Evaluation (Paper) | Hugging Face Model |
|---|---|---|---|---|
| vaas-v1-base-df2023 | DF2023 (10%) | Initial public inference release | F1 & IoU are reported in research paper | vaas-v1-base-df2023 |
| vaas-v1-medium-df2023 | DF2023 (≈50%) | Scale-up experiment | 5% better than base | vaas-v1-medium-df2023 |
| vaas-v1-large-df2023 | DF2023 (100%) | Full-dataset training | 9% better than medium | vaas-v1-large-df2023 |
| v4 | DF2023 + Others | Cross-dataset study (planned) | Cross-dataset eval planned | TBD |
| v5 | Other datasets | Exploratory generalisation study | TBD | TBD |

These planned variants aim to study the effect of training scale, dataset diversity, and cross-dataset benchmarking on generalisation and score calibration.

Notes

- VAAS supports both local and online images

- PyTorch is loaded lazily and only required at runtime

- CPU inference is supported; GPU accelerates execution but is optional
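Since GPU use is optional, a script can choose the device at runtime and fall back to CPU. The sketch below is an assumption-laden example, not part of VAAS: `pick_device` is a hypothetical helper, and whether the `device` argument of `VAASPipeline.from_pretrained` accepts the strings "cuda" and "mps" is inferred from the notebook titles rather than confirmed here.

```python
def pick_device() -> str:
    """Pick "cuda", "mps", or "cpu" depending on what is available."""
    try:
        import torch  # imported lazily so this file loads without PyTorch
    except ImportError:
        return "cpu"

    if torch.cuda.is_available():
        return "cuda"

    # The MPS backend only exists in newer PyTorch builds, so guard the lookup.
    mps = getattr(torch.backends, "mps", None)
    if mps is not None and mps.is_available():
        return "mps"

    return "cpu"


print("selected device:", pick_device())
```

The returned string could then be passed as the `device` argument when constructing the pipeline, subject to the assumption noted above.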

Intended Use

This model can be used for:

- Image anomaly detection

- Visual integrity assessment

- Explainable inspection of irregular regions

- Research on attention-based anomaly scoring

- Prototyping anomaly-aware vision systems

Limitations

- Trained on a single dataset

- Does not classify anomaly types

- Performance may degrade on out-of-distribution imagery

Ethical Considerations

VAAS is intended for research and inspection purposes. It should not be used as a standalone decision-making system in high-stakes or sensitive applications without human oversight.

Citation

If you use VAAS in your research, please cite both the software and the associated paper as appropriate.

@software{vaas,
  title     = {VAAS: Vision-Attention Anomaly Scoring},
  author    = {Bamigbade, Opeyemi and Scanlon, Mark and Sheppard, John},
  year      = {2025},
  publisher = {Zenodo},
  doi       = {10.5281/zenodo.18064355},
  url       = {https://doi.org/10.5281/zenodo.18064355}
}

@article{bamigbade2025vaas,
  title   = {VAAS: Vision-Attention Anomaly Scoring for Image Manipulation Detection in Digital Forensics},
  author  = {Bamigbade, Opeyemi and Scanlon, Mark and Sheppard, John},
  journal = {arXiv preprint arXiv:2512.15512},
  year    = {2025}
}

Contributing

We welcome contributions that improve the usability, robustness, and extensibility of VAAS.

Please see the full guidelines in CONTRIBUTING.md on GitHub.

License

MIT License

Maintainers

OBA-Research
